Cognitive Speech-to-Text Recognition Setup and Configuration [VCS 21.4 UG]

For this guide we assume that you have a VidiCore instance running, either as a VaaS running inside VidiNet or as a standalone VidiCore instance. You should also have an Amazon S3 storage connected to your VidiCore with some video content encoded as mp4.

Launching service

Before you can start transcribing your video content, you need to launch a Speech-to-Text service from the store in the VidiNet dashboard.

Automatic service attachment

If you are running your VidiCore instance as a Vidicore-as-a-Service in VidiNet you have the option to automatically connect your service to your VidiCore by choosing it from the presented drop down during service launch.

Manual service attachment

When launching a media service in VidiNet you will get a ResourceDocument looking something like this:

<ResourceDocument xmlns="http://xml.vidispine.com/schema/vidispine">

<vidinet>

<url>vidinet://aaaaaaa-bbbb-cccc-eeee-fffffffffff:AAAAAAAAAAAAAAAAAAAA@aaaaaaa-bbbb-cccc-eeee-fffffffffff</url>

<endpoint>https://services.vidinet.nu</endpoint>

<type>COGNITIVE_SERVICE</type>

</vidinet>

</ResourceDocument>Register the VidiNet service with your VidiCore instance by posting the ResourceDocument to the following API endpoint:

POST /API/resource/vidinetVerifying service attachment

To verify that your new service has been connected to your VidiCore instance you can send a GET request to the VidiNet resource endpoint.

GET /API/resource/vidinetYou will receive a response containing the names, the status, and an identifier for each VidiNet media service e.g. VX-10. Take note of the identifier for the Text-to-Speech service as we will use it later. You should also be able to see any VidiCore instances connected to your Speech-to-Text service in the VidiNet dashboard.

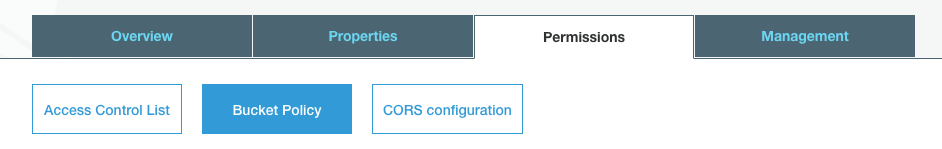

Amazon S3 bucket configuration

Before you can start a transcription job you need to allow a VidiNet IAM account read access to your S3 bucket. Attach the following bucket policy to your Amazon S3 bucket:

{

"Version":"2012-10-17",

"Statement":[

{

"Effect":"Allow",

"Principal":{

"AWS":"arn:aws:iam::823635665685:user/cognitive-service"

},

"Action":"s3:GetObject",

"Resource":[

"arn:aws:s3:::{your-bucket-name}/*"

]

}

]

}Running a transcription job

To start a transcription job on an item using the Speech-to-Text service we just registered, perform the following API call to your VidiCore instance:

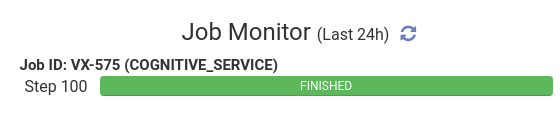

POST /API/item/{itemId}/analyze?resourceId={vidinet-resource-id}where {itemId} is the items identifier e.g. VX-46 and {vidinet-resource-id} is the identifier of the VidiNet service that you previously added e.g. VX-10. You will get a JobId in the reponse which you can use to query the VidiCore API Job endpoint /API/job for the status of the job or check it in the VidiNet dashboard.

When the job has completed, the resulting metadata can be read from the item using for instance:

GET /API/item/{itemId}/metadataA small part of the result may look something like this:

{

"field": [],

"group": [

{

"name": "adu_transcript_AWSTranscribeAnalyzerTransformer",

"field": [],

"group": [

{

"name": "adu_av_analyzedValue",

"field": [

{

"name": "adu_av_value",

"value": [

{

"value": "I have been expecting you Mr. Bond.",

"uuid": "aaaaaaaa-bbbb-cccc-eeee-ffffffff",

"user": "admin",

"timestamp": "2020-01-01T00:00:00.000+0000",

}

]

}

]

}

]

}

],

"start": "118@PAL",

"end": "134@PAL"

}which tells us that the phrase “I have been expecting you Mr. Bond.” was spoken between frames 118 and 134.

Configuration parameters

You can pass additional parameters to the service such as language and segment length. You pass these parameters as query parameters to VidiCore. For instance if our input media was in German and we wanted to create metadata segments that are 10 seconds long we could perform the following API call:

POST /API/item/{itemId}/analyze?resourceId={vidinet-resource-id}&jobmetadata=cognitive_service_Language=de-DE&jobmetadata=cognitive_service_LengthOfSegmentInSec=10See table below for all parameters that can be passed to the Speech-to-Text service and their default values. Parameter names must be prefixed by cognitive_service_.

Parameter name | Default value | Description |

|---|---|---|

Language | EnUS | Language to transcribe. Available languages can be found here |

LengthOfSegmentInSec | 5 | Group transcribed words into time spans of x seconds |

.png)